To define the Confidence Threshold, we must first define what Confidence is in Predict.

Confidence is a numerical value assigned to each Label while Predict evaluates an Issue. Predict determines the Confidence value from the likelihood that a given Label applies to the Issue, given the data available to the Model.

While an Issue is being evaluated by Predict, a Confidence value is assigned to all Labels in the Model. If the highest Confidence value exceeds the Confidence Threshold as defined by your team, then the Label associated with that Confidence value is assigned to the Issue.

Predict assigns a Confidence value to each Label based on the content of the user’s first message. For example, you may have Labels for billing, shipping, and delay. Depending on how you have trained your Model, Predict may assign the Label ‘shipping’ to the Issue below if the Confidence value for ‘shipping’ is the highest and exceeds the Confidence Threshold.

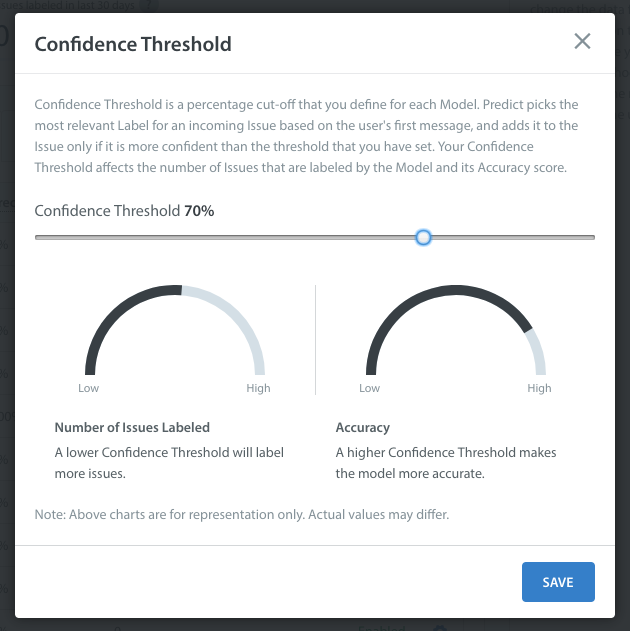
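The assignment rule described above can be sketched in a few lines of Python. This is only an illustration of the logic, not Predict's actual implementation: the function name `pick_label`, the example scores, and the use of percentages from 0 to 100 are all assumptions made for this sketch.

```python
from typing import Optional


def pick_label(confidences: dict[str, float], threshold: float) -> Optional[str]:
    """Return the highest-confidence Label if it exceeds the threshold.

    `confidences` maps Label names to Confidence values (percent, hypothetical).
    Returns None when no Label should be assigned.
    """
    if not confidences:
        return None
    # Find the Label with the highest Confidence value.
    best_label = max(confidences, key=confidences.get)
    # Assign it only if that value exceeds the Confidence Threshold.
    if confidences[best_label] > threshold:
        return best_label
    return None


# Made-up Confidence values for a message about a late parcel.
scores = {"billing": 12.0, "shipping": 81.0, "delay": 7.0}
print(pick_label(scores, threshold=70))  # shipping
print(pick_label(scores, threshold=90))  # None
```

With a threshold of 70%, the Issue is labeled ‘shipping’; with a threshold of 90%, the same Issue is left unlabeled.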

With this in mind, the Confidence Threshold is a value set by the Admins of your team that defines the minimum Confidence value a Label must exceed before Predict assigns it to an Issue. If the Confidence value is lower than this percentage, Predict does not assign the Label.

If you want Predict to assign Labels only to Issues where it is very confident that the classification is correct, set a high Confidence Threshold. This will result in fewer Issues being classified overall, as seen in this graph in the Predict Dashboard.

You can also set a higher Confidence Threshold for only some Labels to keep accuracy higher for those particular Labels. This helps you define Label-related workflows with Bots more accurately.

By default, every Label in a new Model has a Confidence Threshold of 70%. You can change this value after the Model is created.

To update a Label-level Confidence Threshold, select the ‘Edit Confidence Threshold’ option under the Label settings.

You can select any Confidence Threshold value between 0% and 100% for a given Label, and you can set different values for different Labels.
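As a rough sketch of how Label-level thresholds could interact with the assignment rule, the Python below compares the top-scoring Label against that Label's own threshold and falls back to the 70% default when none is set. How Predict combines per-Label thresholds internally is not documented here, so this comparison rule, the function name `pick_label_per_label`, and the example values are assumptions.

```python
from typing import Optional

DEFAULT_THRESHOLD = 70.0  # default Confidence Threshold for new Models, in percent


def pick_label_per_label(
    confidences: dict[str, float],
    thresholds: dict[str, float],
    default: float = DEFAULT_THRESHOLD,
) -> Optional[str]:
    """Assign the top-scoring Label only if it exceeds its own threshold.

    Assumption: the highest-confidence Label is checked against the
    threshold configured for that Label (or the default if none is set).
    """
    if not confidences:
        return None
    best_label = max(confidences, key=confidences.get)
    limit = thresholds.get(best_label, default)
    return best_label if confidences[best_label] > limit else None


# Hypothetical setup: 'billing' drives a Bot workflow, so it gets a stricter threshold.
label_thresholds = {"billing": 90.0}

print(pick_label_per_label({"billing": 85.0, "shipping": 10.0}, label_thresholds))  # None
print(pick_label_per_label({"billing": 10.0, "shipping": 85.0}, label_thresholds))  # shipping
```

The same Confidence value of 85% is enough to assign ‘shipping’ but not ‘billing’, because only ‘billing’ has the stricter 90% threshold.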

Bulk Update Confidence Threshold

You can bulk update confidence threshold values for all the labels.

- Click on the Settings icon for the Model you want to edit.

- Select ‘Bulk Edit Confidence Threshold’ from the dropdown menu.

- Select the new confidence threshold, and click ‘Save’.

This will update the current ‘Confidence Threshold’ values of all the Labels to the new value you selected.
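The effect of a bulk update can be pictured as overwriting every Label's threshold with one value. The Python below is a minimal sketch of that behavior; the function name `bulk_update_thresholds` and the dictionary representation are hypothetical and do not correspond to any Predict API.

```python
def bulk_update_thresholds(thresholds: dict[str, int], new_value: int) -> dict[str, int]:
    """Return a copy of `thresholds` with every Label set to `new_value` (percent)."""
    # Thresholds are percentages, so reject anything outside 0-100.
    if not 0 <= new_value <= 100:
        raise ValueError("Confidence Threshold must be between 0 and 100")
    # Every Label receives the same value, replacing any Label-level setting.
    return {label: new_value for label in thresholds}


current = {"billing": 90, "shipping": 70, "delay": 60}
print(bulk_update_thresholds(current, 80))
# {'billing': 80, 'shipping': 80, 'delay': 80}
```

Any Label-level values set earlier are replaced, so Labels that need a stricter threshold have to be adjusted again after a bulk update.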

The higher you set your Confidence Threshold, the more accurate Predict’s labeling will be. However, a higher Confidence Threshold also means that Predict will label fewer Issues. You need to strike a balance between the number of Issues labeled and the accuracy of the Labels based on your business needs. For this reason, we recommend starting with the default value of 70%, then adjusting it over time as you provide additional data to Predict and integrate it into your existing workflow.
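To make the trade-off concrete, the sketch below measures, for a few thresholds, what share of Issues would be labeled and how many of those Labels would be correct. The sample data is invented purely for illustration, and `coverage_and_accuracy` is a hypothetical helper, not part of Predict.

```python
# Each tuple is (top Confidence value in percent, whether that Label was correct).
# These ten samples are made up for illustration only.
SAMPLES = [
    (95, True), (90, True), (85, True), (80, False), (75, True),
    (65, True), (60, False), (55, False), (40, True), (30, False),
]


def coverage_and_accuracy(samples, threshold):
    """Return (share of Issues labeled, share of those Labels that are correct)."""
    # Only Issues whose top Confidence value exceeds the threshold get a Label.
    labeled = [correct for confidence, correct in samples if confidence > threshold]
    coverage = len(labeled) / len(samples)
    # With no labeled Issues, accuracy is undefined; report 0.0 in that case.
    accuracy = sum(labeled) / len(labeled) if labeled else 0.0
    return coverage, accuracy


for threshold in (50, 70, 90):
    coverage, accuracy = coverage_and_accuracy(SAMPLES, threshold)
    print(f"threshold={threshold}%  labeled={coverage:.0%}  accurate={accuracy:.1%}")
# threshold=50%  labeled=80%  accurate=62.5%
# threshold=70%  labeled=50%  accurate=80.0%
# threshold=90%  labeled=10%  accurate=100.0%
```

In this invented data set, raising the threshold from 50% to 90% improves accuracy but cuts the share of labeled Issues from 80% to 10%. Your own numbers will differ, so a comparison like this is best run on Issues your team has already reviewed.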